Story Highlight

– Over half a million non-fatal workplace injuries in the UK last year.

– Traditional safety measures are inadequate for modern environments.

– AI can proactively identify risks invisible to humans.

– Effective AI design requires trust and clear communication.

– Automation will enhance, not replace, human safety oversight.

Full Story

Chris Coote from Dexory discusses the evolving role of artificial intelligence (AI) in enhancing safety standards across various high-risk sectors, not just in warehouses.

Despite strict regulations and safety protocols, workplace accidents continue to be an alarming reality. The latest statistics from the UK’s Health and Safety Executive reveal that more than half a million non-fatal injuries were recorded last year. Separately, Amazon’s UK fulfilment centres reported 119 serious incidents along with over 1,400 ambulance callouts from 2019 to 2024. These figures highlight a pressing issue: traditional health and safety practices have become insufficient.

Conventional methods, including checklists and personal protective equipment (PPE), were developed for simpler operational frameworks. However, today’s expansive warehouses and rapid production lines present challenges that reactive methods can no longer adequately address. Carrying out compliance checks only after incidents occur may fulfil regulatory requirements, yet it does little to avert harm. To genuinely protect workers, a shift towards proactive, prescriptive, and predictive health and safety strategies is essential.

Many industrial environments reveal safety practices that seem disconnected from current technological advancements. Risk assessments are frequently performed annually, while inspections rely heavily on human observation, often under time constraints. Reporting systems typically depend on workers documenting issues long after they’ve occurred. While these processes aim to cultivate accountability, they often foster a culture of minimal compliance.

This reluctance to innovate stems largely from framing safety as a mere obligation rather than an opportunity for improvement. Other areas of industrial operations, such as robotics and supply chain management, have embraced technological advances, which raises the question: why has safety not progressed similarly?

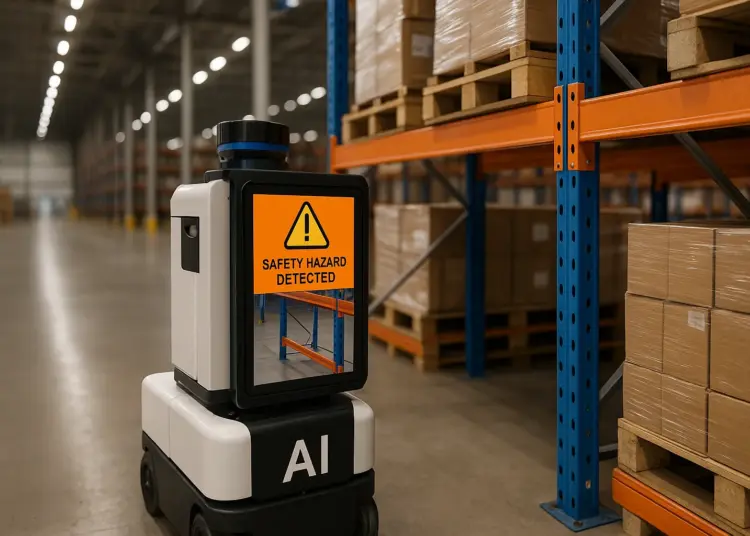

AI technology is beginning to alter this landscape substantially. Unlike human inspectors, AI systems can analyse vast amounts of data continuously without fatigue. Advanced robots fitted with sophisticated sensors and vision systems can scan facilities in real time, identifying risks that may not be detectable by the human eye.

For example, in warehouses, structural defects like beam fractures or hazardous loading configurations can go undetected during manual inspections. However, computer vision technology can identify these issues instantaneously. Similarly, other technologies can foresee structural problems in key infrastructure, such as bridges and machinery, well before they pose a risk. These methods are not just theoretical; they are actively being integrated into operational practices today.

The potential impacts of this shift in approach are profound. By responding to early warning signals, organisations can engage in proactive maintenance and adjust workflows to prevent accidents. This not only lowers insurance costs and minimises operational downtime but, crucially, improves worker safety.

Nonetheless, identifying risks through AI is only part of the equation. Ensuring that personnel trust and act upon AI-generated alerts presents a significant hurdle. Many industry workers may disregard warnings due to their frequency, inconvenience, or lack of clarity.

As such, integrating AI in high-risk environments requires a focus on user design; the technology must cater to the needs of workers. Effective AI systems communicate insights in clear, straightforward language, contextualising information to drive better responses. For instance, a direct notification indicating that “This beam may fail within six months unless repaired” is far more actionable than a vague alert.

Moreover, it is vital for organisations to validate the technology to build trust. When employees see consistent alignment between AI predictions and real-world outcomes, such as damaged racks confirmed by engineers, faith in the system grows. Over time, AI can evolve from a mere tool to a reliable partner in enhancing workplace safety.

The future of health and safety is poised for greater automation as AI becomes more sophisticated. Routine evaluations, whether of safety equipment or site conditions, are likely to be undertaken by autonomous systems. Incident documentation will transition from manual entries to real-time data generated by sensors, enabling safety personnel to spend more time acting on insights rather than managing paperwork.

While there are concerns regarding potential job losses in the safety sector, it is essential to recognise that the human element (making judgments and ethical decisions, and leading safety culture) will remain crucial. Instead of replacing jobs, AI will relieve workers of repetitive tasks, allowing greater focus on safeguarding their colleagues.

The evolution of health and safety is proceeding apace. It extends beyond mere compliance; it embodies respect and care for those ensuring the smooth operation of industries. Although traditional methods have proven effective in the past, stagnation in injury reduction signals their limitations.

By adopting advanced technologies that promote proactive safety measures, businesses can embed safety into the core of their operational frameworks, rather than relegating it to an afterthought. Companies that excel in this transition will not only lower incident rates but will also define new benchmarks for safe and responsible work in the modern era.

Chris Coote is Director of Product at Dexory.

AI offers powerful tools to spot patterns and hazards that are easy to miss during routine checks, and moving from reactive to proactive safety management could cut incidents and improve outcomes. Success will depend on ensuring AI outputs are transparent and reliable so the workforce trusts alerts and knows how to act on them. Technology should augment skilled safety professionals and site teams, not replace them, so investment in training, clear escalation paths and practical field validation of AI findings is essential.

I agree that AI offers powerful tools to shift safety from reactive to proactive. Machine learning can spot patterns and near misses that humans might overlook and support better allocation of resources to where risk is greatest. Practical success will hinge on reliable data, transparent algorithms and clear, actionable alerting so frontline teams trust and can act on the outputs. Equally important is investing in training and engagement so workers understand how AI supports decisions and know when to escalate. Technology should augment human judgement, not replace it, and governance and maintenance of AI systems must be treated as an ongoing safety activity.

This is a timely point. AI can add real value by spotting patterns and near misses that manual checks miss, helping to prevent incidents rather than just record them. Practical deployment will hinge on clear validation of models, integration with existing reporting systems, and straightforward alerting that frontline staff trust and understand. Keep the human in the loop for judgement, escalation and continuous improvement, and focus on training, data quality and governance to make AI a genuine safety tool rather than a black box.

AI offers powerful tools to spot patterns and emerging risks that are often missed in routine inspections, especially in complex or rapidly changing operations. For practical impact organisations must focus on quality data, clear alerting that workers understand and trust, and training that integrates AI insights into existing safety decision making. Technology is most effective when it supports experienced people rather than trying to replace them, and governance around validation, explainability and accountability will determine whether AI actually reduces harm or just shifts liability.