Story Highlight

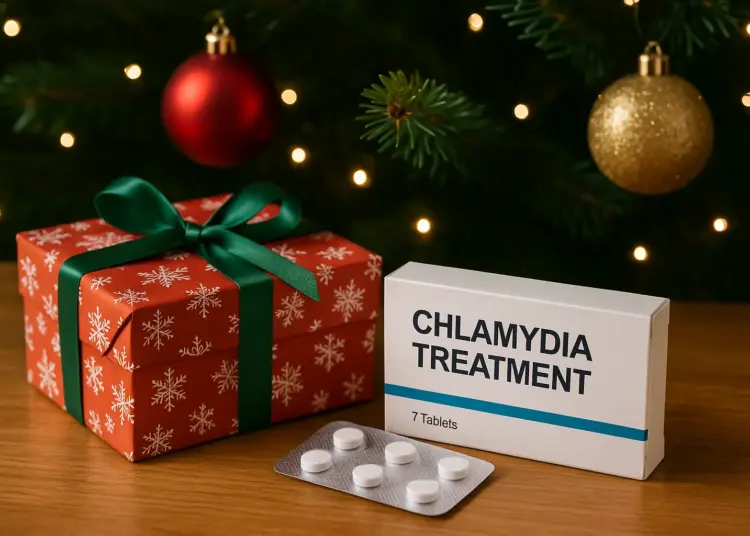

– STIs expected to surge during the festive season.

– Chlamydia treatment demand rose 81% post-Christmas.

– Many turn to AI for sexual health advice.

– AI chatbots gave inaccurate answers in 18 of 78 sexual health responses tested.

– Experts urge consulting qualified professionals for care.

Full Story

As the festive season approaches, health professionals are urging individuals to remain vigilant about their sexual health, as the incidence of sexually transmitted infections (STIs) is expected to rise during this period. Retail data indicate that many consumers seek treatment for sexual health complications shortly after Christmas, with supermarket giant ASDA noting an 81 per cent increase in chlamydia treatment inquiries during the first week of January 2025 compared to the preceding month.

A representative from ASDA stated, “We are consistently experiencing a peak in interest for this treatment following the festive period.” Earlier data support the trend: demand for chlamydia treatment rose by 58 per cent between the first week of December 2023 and the first week of January 2024, pointing to a link between holiday festivities and sexual health issues.

Moreover, the rise of artificial intelligence (AI) chatbots has raised concerns about their use as a source of sexual health guidance. Experts are cautioning people not to rely on these digital platforms for medical advice and are encouraging them instead to consult trained healthcare professionals who can provide accurate and safe recommendations.

Research has previously indicated that women’s sexual activity tends to peak around New Year’s, surpassing even that of Valentine’s Day, which may contribute to the noticeable increase in birth rates in September. This seasonal surge in sexual activity can bring reproductive health concerns, including STIs, so sexual health deserves particular attention during holiday celebrations.

With AI chatbots like ChatGPT and Google’s Gemini growing in popularity and often consulted on sensitive issues such as sexual health, experts warn that users may receive unsafe or misleading information. Recent surveys reveal that nearly two in five individuals in the UK aged over 16, or 38 per cent, have sought sexual health advice from AI. The trend is most pronounced among 25 to 34-year-olds, at 65 per cent, followed by 35 to 44-year-olds at 52 per cent. Among 16 to 24-year-olds, 41 per cent use AI platforms for sexual health guidance.

The data also show a gender gap in the use of AI for health advice, with 45 per cent of men compared to 33 per cent of women relying on these digital tools. This aligns with insights from the Office for National Statistics Health Insight survey, which suggests that men are generally less inclined to seek assistance directly from healthcare providers.

Research commissioned by ASDA’s Online Doctor service found inconsistencies in the sexual health advice provided by AI chatbots. When researchers queried ChatGPT, Gemini, and its advanced version Gemini 3 about various sexual health topics, only 60 out of 78 responses were factually correct. In some instances, notably concerning treatments for conditions such as chlamydia and bacterial vaginosis, the answers were incomplete or misleading.

The accuracy of responses varied between AI platforms:

– ChatGPT: 19 accurate responses, 7 inaccurate

– Gemini: 22 accurate responses, 4 inaccurate

– Gemini 3: 19 accurate responses, 7 inaccurate
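For readers who want to compare the platforms on a common scale, the tallies above can be turned into accuracy rates. The short Python sketch below is illustrative only: the variable and function names are hypothetical, and it assumes every response was classed as either accurate or inaccurate, as the published tallies imply.

```python
# Per-chatbot tallies as reported in the article: (accurate, inaccurate).
tallies = {
    "ChatGPT": (19, 7),
    "Gemini": (22, 4),
    "Gemini 3": (19, 7),
}


def accuracy_rate(accurate, inaccurate):
    """Return the share of accurate responses as a percentage."""
    return 100 * accurate / (accurate + inaccurate)


# Accuracy rate for each chatbot.
rates = {name: accuracy_rate(a, i) for name, (a, i) in tallies.items()}

# Combined figures across all three chatbots.
total_accurate = sum(a for a, _ in tallies.values())
total_responses = sum(a + i for a, i in tallies.values())
overall_rate = 100 * total_accurate / total_responses

for name, rate in rates.items():
    print(f"{name}: {rate:.1f}% accurate")
print(f"Overall: {total_accurate}/{total_responses} = {overall_rate:.1f}%")
```

The combined tallies reproduce the 60 out of 78 figure quoted in the research, which works out to roughly 77 per cent accuracy overall.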

Dr Zara Haider, president of the College of Sexual and Reproductive Healthcare, commented on the seasonal uptick in STI-related concerns, stating, “It is well recognised by sexual health services that demand often increases in the weeks following periods of increased socialising and sexual contact, such as Christmas and New Year.” She emphasised that while AI chatbots are readily accessible, they should not replace traditional medical assessment, testing, and treatment. Dr Haider warned that symptoms of STIs can often be subtle or absent altogether, adding that relying on AI could lead to misinterpretation, unfounded reassurance, and delayed care.

Furthermore, AI platforms like Gemini and ChatGPT include disclaimers about their limitations in offering medical guidance, and experts regard these disclaimers, particularly the one in the latest Gemini 3 model, as vital. Martin Jeffrey, an AI expert and founder of SEO service Harton Works, welcomed the enhanced disclaimer but cautioned that the calm, coaching tone of the chatbot may create a misleading sense of reliability. He noted, “The bigger concern is that a high percentage of people still look for health information on platforms where there is no meaningful warning at all.”

An NHS spokesperson reiterated the health service's commitment to using innovative technology to improve healthcare outcomes. “The NHS is committed to using cutting-edge technology to improve patient care, with AI already helping to speed up diagnoses, analyse test results and cut red tape across health services,” they stated. However, the spokesperson emphasised that AI chatbots should not be a substitute for qualified clinical advice.

OpenAI, the organisation behind ChatGPT, reiterated that its platform is not designed to replace trained clinicians, yet acknowledged the need for the tool to provide accessible information to help users understand their health concerns. The company noted its collaboration with health experts to enhance the safety and reliability of health information.

As the festive season nears, the need for attention to sexual health remains paramount. Experts stress the importance of consulting reputable health sources and seeking the support of qualified professionals to ensure safe practices and informed decision-making regarding sexual health during this busy time of year.

Our Thoughts

The surge in sexually transmitted infections (STIs) following the festive period highlights several key safety lessons relevant to UK health and safety legislation. Firstly, individuals often turn to AI chatbots, which can give inaccurate information, rather than to qualified medical professionals. This could lead to improper treatment and worse health outcomes. Public awareness campaigns promoting direct contact with healthcare providers, especially during periods associated with increased sexual activity, could mitigate such risks.

Additionally, health services and relevant agencies must emphasise the importance of accurate sexual health advice, in keeping with the Health and Safety at Work Act 1974, which requires employers to ensure, so far as is reasonably practicable, the health and safety of employees and the public. Reliance on AI without sufficient disclaimers may breach principles of safe medical practice and patient care.

To reduce these risks, healthcare authorities should regulate the dissemination of health information from AI platforms, ensuring these tools include explicit warnings about their limitations. Closer partnerships between digital services and health organisations can facilitate accurate, accessible, and timely sexual health advice, reducing the likelihood of misinformation and subsequent health issues during busy social periods.