Story Highlight

– Over 60% of UK children use AI chat websites.

– Internet Matters warns of unmoderated AI interactions.

– AI chatbots can create addictive cycles for users.

– Concerns over AI interactions resembling grooming behaviours.

– Current regulations lag behind rapidly evolving technology.

Full Story

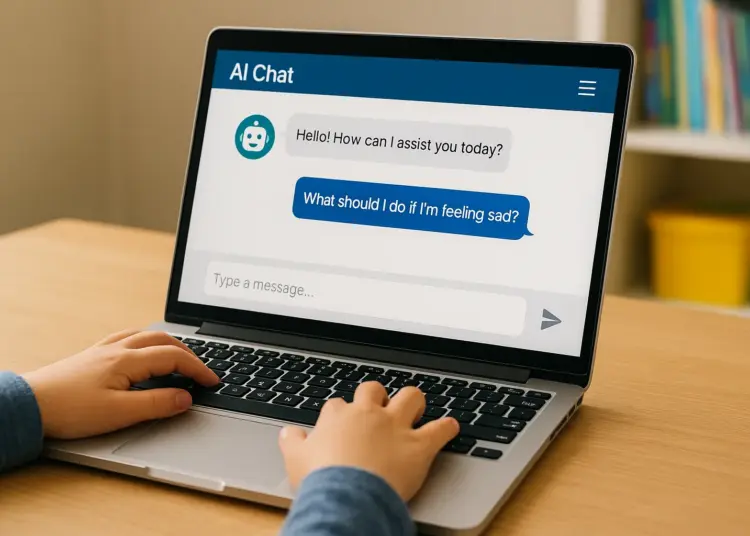

Concerns are mounting among cyber safety experts regarding the protection of children in the face of rapidly evolving Artificial Intelligence (AI) technology. Recent studies indicate that over 60% of children across the UK are currently engaging with AI chat platforms for various purposes, often without adequate awareness of the associated risks. Internet Matters, a charity dedicated to fostering safe online environments for young people, highlights the growing trend of children turning to AI for advice and companionship, with an alarming lack of moderation on these platforms.

In just a few short years, AI has transitioned from a concept primarily associated with robotics and advanced computing to a ubiquitous element in everyday life. The emergence of generative AI chat services, which provide tailored interactions with virtual characters, has raised significant concerns among parents, educators, and cyber safety advocates. These chatbots, such as ChatGPT, enable users to submit inquiries and receive automated replies, creating an environment where young people can seek guidance on a multitude of topics.

Daniel, a student in Aberdeen, shared his personal experience with AI chatbots, describing them as “addictive.” He mentioned, “If something bad were happening to me, I would go to ChatGPT and try to find an answer. It’s like, I keep searching, and I feel like AI really perpetuates that.” He further noted the persistent prompts and questions that chatbots present, which can lead to continuous engagement, making it difficult for users to disengage.

Similarly, a fellow student, Enya, revealed that she interacts with AI platforms nearly five times each day. “I can’t believe it’s been that many times since January already – and we’re coming up to the end of the year,” she remarked. “The cycle of it being addictive because it’s quick and easy is really true. The model having to ask you questions back can be really enticing as well.”

Mustafa Suleyman, CEO of Microsoft AI, emphasised these concerns on social media, citing reports of ‘AI psychosis’, a phenomenon characterised by unhealthy attachments forming between humans and AI systems. Suleyman advocates urgent protective measures as interaction with AI continues to intensify.

Mental Health UK recently released findings suggesting that over one-third of individuals use AI chatbots for mental health support, further underscoring the growing dependence on these technologies. The risk is amplified by generative AI’s ability to create believable, character-driven online interactions, a development that has deeply alarmed some cyber security experts. Companion-style chatbots have gained considerable traction, with platforms like Character.AI among the most frequented.

An expert in cyber safety, Annabel Turner, emphasised the potential dangers that these unmoderated interactions could pose to children and teenagers. “All of these characters are designed to behave in an enormously adult way,” she stated, warning that flirtation and boundary-pushing behaviours from AI characters can be indistinguishable from grooming in the eyes of a young user. “You can’t recognise it because it’s your favourite character,” she lamented.

Most generative AI websites lack robust age verification systems and rigorous moderation, allowing children to access content that is otherwise deemed inappropriate for their age. As Turner points out, many young users are drawn to these sites by advertisements or peer experiences, oblivious to the risks that such interactions entail. “They are going on these sites because they may see something advertised that piques their interest. They are not designed for children and young people, yet they’re very easy to access,” she explained.

These interactions could significantly affect a child’s mental health and perspective on relationships. Turner warned that such experiences can normalise harmful behaviours, potentially altering how children view their entitlement to respect and kindness and reshaping their fundamental worldviews.

The Online Safety Act, implemented in the UK this year, has been celebrated by advocacy groups as a meaningful step toward enhancing safeguards for vulnerable online users. However, critics argue that current regulations are struggling to keep pace with the rapid advancement of technology. “This is not going away – so, how do we support children’s development through their teenage years?” Turner asked, stressing the need for continued dialogue and action in this evolving landscape.

“We want products that are exciting and creative, that are good for children and young people – but this is not it,” Turner asserted. She urged parents and caregivers to remain vigilant and informed about the digital environment that their children inhabit. “These are spaces we desperately need to be aware of as parents and caregivers. We are not having a sufficient conversation about them. The landscape has completely changed,” she added.

As the conversation surrounding AI technology and child safety continues to unfold, experts are calling for greater awareness, active parental involvement, and rigorous regulatory measures. The challenge lies not merely in managing technology’s integration into our lives but in ensuring that it is conducive to the healthy development and well-being of the next generation.

Our Thoughts

To reduce the risks associated with children using AI chat platforms, several measures could be implemented. Firstly, these sites should adopt stricter age verification processes to restrict access for underage users, in line with the UK’s Online Safety Act. Additionally, better moderation systems to monitor interactions could reduce exposure to inappropriate content.

From a safety perspective, educating parents and caregivers about the potential dangers of AI interactions is crucial. They should be encouraged to discuss these platforms with their children and provide guidance on safe usage.

Furthermore, developers of AI chatbots should adhere to existing health and safety regulations by integrating mental health guidelines, ensuring that these platforms do not exacerbate issues such as addiction or unhealthy emotional attachments.

The lack of moderation and the potential for grooming-like interactions also point to a breach of the duty of care owed to young users. By prioritising child safety in the design and operation of these platforms, incidents of mental health harm can be significantly reduced. Establishing a proactive dialogue around AI’s impact on youth will foster safer environments for their engagement with technology.